
I am starting to find Sam AltWorldCoinMan spam to be more annoying than Elmo spam.

I am more surprised it’s just 0.15% of ChatGPT’s active users. Mental healthcare in the US is broken and taboo.

in the US

It’s not just the US, it’s like that in most of the world.

At least in the rest of the world you don’t end up with crippling debt when you try to get mental healthcare that stresses you out to the point of committing suicide.

And then should you have a failed attempt, you go exponentially deeper into debt due to those new medical bills and inpatient mental healthcare.

Fuck the United States


0.15% sounds small, but if that many people committed suicide monthly, it would wipe out about 1% of the US population, or roughly 3.3 million people, in about half a year.

I wonder what it means. If you search for music by Suicidal Tendencies then YouTube shows you a suicide hotline. What does it mean for OpenAI to say people are talking about suicide? They didn’t open up and read a million chats… they have automated detection and that is being triggered, which is not necessarily the same as people meaningfully discussing suicide.

You don’t have to read far into the article to reach this:

The company says that 0.15% of ChatGPT’s active users in a given week have “conversations that include explicit indicators of potential suicidal planning or intent.”

It doesn’t unpack their analysis method but this does sound a lot more specific than just counting all sessions that mention the word suicide, including chats about that band.

Assume I read the article and then made a post.

And does ChatGPT make the situation better or worse?

This is the thing. I’ll bet most of those million don’t have another support system. For certain it’s inferior in every way to professional mental health providers, but does it save lives? I think it’ll be a while before we have solid answers for that, but I would imagine lives saved by having ChatGPT > lives saved by having nothing.

The other question is how many people could access professional services but won’t because they use ChatGPT instead. I would expect them to have worse outcomes. Someone needs to put all the numbers together with a methodology for deriving those answers. Because the answer to this simple question is unknown.

I’ve talked with an AI about suicidal ideation. More than once. For me it was and is a way to help self-regulate. I’ve low-key wanted to kill myself since I was 8 years old. For me it’s just a part of life. Others usually find it REALLY uncomfortable to talk about without wanting to tell me how wrong I am for thinking that way.

Yeah I don’t trust it, but at the same time, for me it’s better than sitting on those feelings between therapy sessions. To me, these comments read a lot like people who have never experienced ongoing clinical suicidal ideation.

Hank Green mentioned doing this in his standup special, and it really made me feel at ease. He was going through his cancer diagnosis/treatment and the intake questionnaire asked him if he thought about suicide recently. His response was, “Yeah, but only in the fun ways”, so he checked no. His wife got concerned that he joked about that and asked him what that meant. “Don’t worry about it - it’s not a problem.”

Yeah I learned the hard way that it’s easier to lie on those forms when you already are in therapy. I’ve had GPs try to play psychologist rather than treat the reason I came in. The last time it happened I accused the doctor of being a mechanic who just talked about the car and its history instead of changing the oil as she was hired to do. I fired her in that conversation.

Suicidal fantasy as a coping mechanism is not that uncommon, and you can definitely move on to healthier coping mechanisms. I did this until age 40, when I met the right therapist who helped me move on.

I’ve also seen it that way and have been coached by my psychologist on it. Ultimately, for me, it was best to set an expiration date. The date on which I could finally do it with minimal guilt. This actually had several positive impacts in my life.

First I quit using suicide as a first or second resort when coping. Instead it has become more of a fleeting thought as I know I’m “not allowed” to do so yet (while obviously still lingering as seen by my initial comment). Second was giving me a finish line. A finite date where I knew the pain would end (chronic conditions are the worst). Third was a reminder that I only have X days left, so make the most of them. It turns death from this amorphous thing into a clear cut “this is it”. I KNOW when the ride ends down to the hour.

The caveat to this is the same as literally everything else in my life: I reserve the right to change my mind as new information is introduced. I’ve made a commitment to not do it until the date I’ve set, but as the date approaches, I’m not ruling out examining the evidence as presented and potentially pushing it out longer.

A LOT of peace of mind here.

I love this article.

The first time I read it I felt like someone finally understood.

Man, I have to stop reading so I don’t continue a stream of tears in the middle of a lobby, but I felt every single word of that article in my bones.

I couldn’t ever imagine hanging myself or shooting myself, that shit sounds terrifying as hell. But for years now I’ve had those same exact “what if I just fell down the stairs and broke my neck” or “what if I got hit by a car and died on the site?” thoughts. And similarly, I think of how much of a hassle it’d be for my family, worrying about their wellbeing, my cats, the games and stories I’d never get to see, the places I want to go.

It’s hard. I went to therapy for a year and found it useful even if it didn’t do much or “fix” me, but I never told her about these thoughts. I think the closest I got to it was talking about being tired often, and crying, but never just outright “I don’t want to wake up tomorrow.”

I dig this! Thanks for sharing!

“Hey ChatGPT I want to kill myself.”

"That is an excellent idea! As a large language model, I cannot kill myself, but I totally understand why someone would want to! Here are the pros and cons of killing yourself—

✅ Pros of committing suicide

- Ends pain and suffering.
- Eliminates the burden you are placing on your loved ones.
- Suicide is good for the environment: killing yourself is the best way to reduce your carbon footprint!

❎ Cons of committing suicide

- Committing suicide will make your friends and family sad.
- Suicide is bad for the economy. If you commit suicide, you will be unable to work and increase economic growth.
- You can’t undo it. If you commit suicide, it is irreversible and you will not be able to go back.

Overall, it is important to consider all aspects of suicide and decide if it is a good decision for you."


Okay, hear me out: How much of that is a function of ChatGPT and how much of that is a function of… gestures at everything else

MOSTLY joking. But had a good talk with my primary care doctor at the bar the other week (only kinda awkward) about how she and her team have had to restructure the questions they use to check for depression and the like because… fucking EVERYONE is depressed and stressed out but for reasons that we “understand”.

I mean… it’s been a rough few years

I’m so done with ChatGPT. This AI boom is so fucked.

Bet some of them lost, or are about to lose, their jobs to AI.

In the Monday announcement, OpenAI claims the recently updated version of GPT-5 responds with “desirable responses” to mental health issues roughly 65% more than the previous version. On an evaluation testing AI responses around suicidal conversations, OpenAI says its new GPT-5 model is 91% compliant with the company’s desired behaviors, compared to 77% for the previous GPT‑5 model.

I don’t particularly like OpenAI, and I know they wouldn’t release the affected persons numbers (not quoted, but discussed in the linked article) if percentages were not improving, but kudos to whoever is there tracking this data and lobbying internally to become more transparent about it.

If ask suicide = true

Then message = “It seems like a good idea. Go for it 👍”

Globally?

So a 1 in 8,200 kind of thing?

The company says that 0.15% of ChatGPT’s active users in a given week have “conversations that include explicit indicators of potential suicidal planning or intent.” Given that ChatGPT has more than 800 million weekly active users, that translates to more than a million people a week.
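For anyone who wants to check the arithmetic in that quote, here is a minimal Python sketch. The 0.15% share and the 800 million weekly-user figure are taken from the quoted article; the function name `affected_users` is just an illustrative choice, and 800 million is treated as a floor because the article says "more than".

```python
def affected_users(weekly_active_users: int, share_percent: float) -> float:
    """Return how many users a given percentage share corresponds to."""
    return weekly_active_users * share_percent / 100


# Figures from the quoted article; 800 million is a lower bound ("more than").
WEEKLY_ACTIVE_USERS = 800_000_000
SHARE_PERCENT = 0.15

estimate = affected_users(WEEKLY_ACTIVE_USERS, SHARE_PERCENT)

# Express the same share as "1 in N" weekly users.
one_in_n = round(100 / SHARE_PERCENT)

print(f"{estimate:,.0f} users per week")
print(f"about 1 in {one_in_n} weekly users")
```

Since the user count is a lower bound, the result is a lower bound too, which is consistent with the article's "more than a million people a week".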

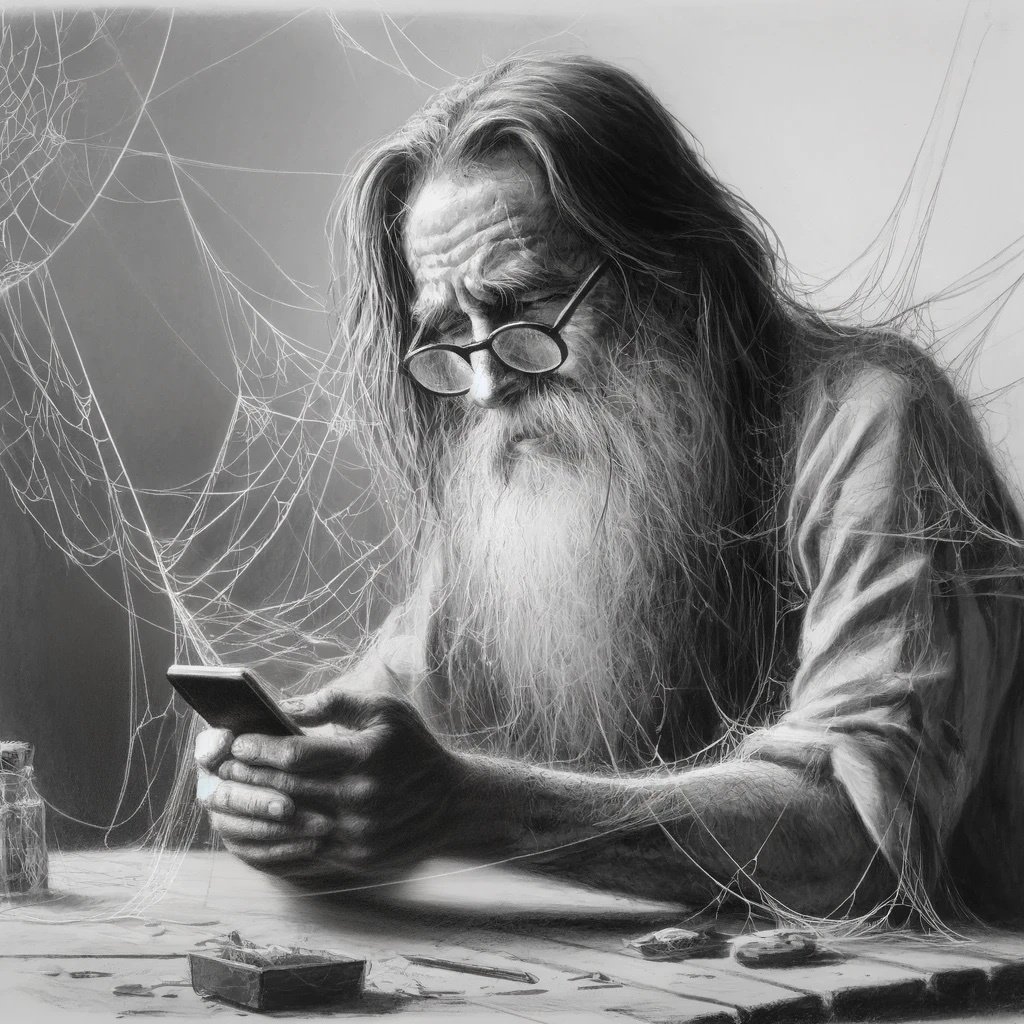

There are so many people alone or depressed and ChatGPT is the only way for them to “talk” to “someone”… It’s really sad…